Sentence Embedding Models for Finding TV Show Quotes

Disclaimer:

Some of the content in this blog post is not created by me but quotes from popular TV shows, I mean in no way to copyright this content, and am simply showing as the result of a fun project.

If someone gets on my case about this just remember -

"I don't own this"

Michael: Oh God!

Toby: That is not mine. I have never seen that before.

The Office - Frame Toby · Season 5, Episode 9

The problem. I am a big fan of comedy TV. I sometimes have cases where I am talking with friends and we joke about a great line in some TV show or other. It sometimes bothers me if the quote isn't quite right, so I then spend time either finding the episode (which sometimes I do not know) or scrolling through scripts online.

"There must be a better way to do this",

[Scene: Monica and Chandler's, Joey and Chandler are still working on the door.]

Chandler: There has got to be a way!

Joey: Easy there Captain Kirk. Oh, do you have a bobby pin?

Friends - The Secret Closet • Season 8, Episode 14

I think.

Sentence Embedding Models

The idea behind this project is to use sentence embedding models. The actual mechanisms behind these models can take a bit of understanding, but for this post, you don't need any technical depth, and I summarise the main points below. If you are interested in slightly more detail I have written a brief introduction to sentence embedding models which introduces the concepts more rigorously than here.

The core idea is that sentence embedding models take a sequence of text as input (e.g. "The sun was hot.") and maps it to a real-valued vector (of the order thousands of dimensions). The model has been trained to map semantically similar sentences to similar vector spaces. Dissimilar sentences are mapped to be far apart in the vector space.

Case Study: Quote Finder for TV Shows

The approach. If we can encode all quotes from a given TV show using a sentence embedding model, we have a separate vector for all quotes which we can save in some vectro database. In principle, we can then perform a vector search with respect to a query vector generated from our own input text using the same embedding model. In essence, we can search the TV show for quotes that semantically match our example!

The basis of this idea is how Retrieval Augmented Generation (RAG) works! The user's prompt is embedded, and a large corpus is searched against and the most relevant text is returned to modify the prompt.

The pipeline is surprisingly quick and easy. We first scrape TV show scripts from the internet, clean them up and produce a consistent format, that might look something like this:

# 05x04 - Baby Shower

# Episode: 05x04

# Title: Baby Shower

# URL: https://transcripts.foreverdreaming.org/viewtopic.php?t=25374&view=print

# Episode ID: 25374

Dwight: [looking pregnant] Hey Michael?

Michael: Yeah.

Dwight: Contractions are coming every ten minutes.

Michael: OK, just remember to keep breathing.

Dwight: My cervix is ripening.

Michael: OK, good.

We then create metadata which stores individual chunks of our scripts:

{

"source_file": "dunder_mifflin_infinity__parts_1_2_s04e03_04_56.txt",

"chunk_id": 596,

"text": " WHERE ARE THE TURTLES? WHERE ARE THEY?",

"character": "Michael",

"episode_title": "Dunder Mifflin Infinity",

"season": 4,

"episode": "3/4",

"episode_string": "s4e3/4"

},

You see a "chunk_id" in the above json entry. A chunk is some amount of text data we allow our model to embed. As we

"increase the size of a chunk"

Pam: Do you have something in your pocket?

Michael: ...Chunky. Do you want half?

The Office - Business School • Season 3, Episode 17

, the semantic meaning might change, and indeed if we have a chunk that spans the end and start of two different paragraphs, our sentence embedding model might struggle.

Therefore, how we chunk our data is important. There are many ways that chunking happens in practice, one can either chunk every i new lines (as is done here), or every l strings. We can even use models to semantically chunk data!

For every chunk, we run our model, get a nice vector and

"store in a database"

Andy Dwyer: The shoeshine stand is gone. Or maybe it was never here.

Ron Swanson: They must have moved it during the remodel. It's probably in basement storage. To the basement!

Parks and Recreation - Pie-Mary • Season 7, Episode 9

. For this project, I use the FAISS library and store vectors locally in .bin files.

The pipeline then works like so:

1) Pass input text into our sentence embedding model (either using an API or from a model downloaded locally)

2) Use FAISS to perform vector search. FAISS has the option for a few different algorithms, but the default does something like determine the smallest L2 distances. Alternatively, the vectors can be normalised so that L2 distance is equivalent to cosine similarity.

3) Produce a nicely formatted json file with the results. The json entry may have attributes such as "episode", "character" etc.

4) This data can either be printed normally in a terminal, or you could create a tool and allow LLMs to call the tool, for example, using Model Context Protocol (MCP), see later.

The results. We can now (provided we have extracted the scripts and formatted correctly) semantically search quotes from TV shows! I have dotted some examples throughout the blog to give you an idea of how it works. Suprsingly, I didn't actually look into this because I needed a more efficient way to check TV show quotes. This case study is just a nice example that illustrates a use case of sentence embedding models. If you wanted to be really efficient and quirky you could start writing emails purely from show quotes:

Instead of

"Hi Alex,

I hope you’re well. I just wanted to follow up on our discussion from earlier this week regarding the project timeline.

Could you confirm whether the revised milestones still work on your side?

If helpful, I’m happy to jump on a quick call to align.

Best regards,

Matt"

How about

"Hi Alex,

Have you had a chance to look over the revisions on the contract I've prepared for you?

If you ever need any help, I am just a phone call away.

Best regards,

Matt"

Which are quotes from

"Angela"

Dwight: [on the phone] Hey, what's up, kid?

Angela: Have you had a chance to look over the revisions on the contract I've prepared for you?

Dwight: Nothing left to do except dot the I's, the J's, and the umlauts. Why don't you meet me here at exactly mid-late afternoon?

The Office - The Delivery • Season 6, Episode 17/18

and

"Michael"

Pam: Yeah, he tried to set up my TIVO for me but then I didn't have audio for a week.

Michael: If you ever need any help, I am just a phone call away.

Jan: I bet you are.

The Office - Dinner Party • Season 4, Episode 13

, in The Office.

I mentioned MCP earlier. Briefly, if you are not sure what I mean by MCP, it's a standardised protocol to allow your tool calling/resource functions (functions you write, that allow LLMs to call them and use the results during inference) to be wrapped in a standardised way to allow streamlined use with other language models, or sharing of the function etc.

Tool calling is slightly different from RAG, which I also mentioned earlier, as tool calling happens during inference, whereas RAG is conventionally used to give an LLM a stack of context from which to create a response (this still happens during inference, I suppose, but before the model has started responding).

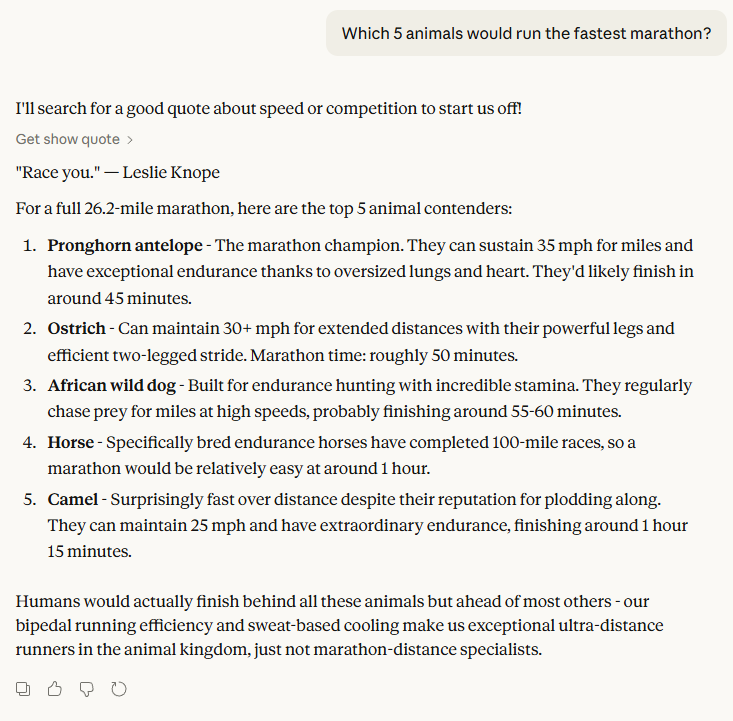

I wrapped the code to produce an MCP tool. LLMs like Claude can now use quote finder during normal use! I show an example below.

Without a web call, this isn't a bad stab from Sonnet 4.5, but what is fun is the quote from Leslie Knope from Parks and Recreation. You can expand the "Get show quote" for the top 5 results, along with the metadata.

Closing Thoughts

I'm sharing the GitHub repo I created for this project: quote_finder. Note, this code works for my own case. You might extract different metadata from TV shows depending on your preferences. The code should probably be used more as a guide; I suggest dropping the entire codebase into an LLM and refactoring it for your particular set up.